How Phi-3-Vision-128K Enhances Document Processing with AI-Powered OCR

In the evolving landscape of artificial intelligence, the development of multimodal models is reshaping how we interact with and process data. One of the most groundbreaking innovations in this space is the Phi-3-Vision-128K-Instruct model—a cutting-edge, open multimodal AI system that integrates visual and textual information. Designed for tasks like Optical Character Recognition (OCR), document extraction, and comprehensive image understanding, Phi-3-Vision-128K-Instruct has the potential to revolutionize document processing, from PDFs to complex charts and diagrams.

In this article, we will explore the model’s architecture, primary applications, and technical setup and explore how it can simplify tasks like AI-driven document extraction, OCR, and PDF parsing.

What is Phi-3-Vision-128K-Instruct?

Phi-3-Vision-128K-Instruct is a state-of-the-art multimodal AI model in the Phi-3 model family. Its key strength lies in its ability to process textual and visual data, making it highly suitable for complex tasks requiring simultaneous interpretation of text and images. With a context length of 128,000 tokens, this model can handle large-scale document processing, from scanned documents to intricate tables and charts.

Trained on 500 billion tokens, including a mix of synthetic and curated real-world data, the Phi-3-Vision-128K-Instruct model utilizes 4.2 billion parameters. Its architecture includes an image encoder, a connector, a projector, and the Phi-3 Mini language model, all working together to create a powerful yet lightweight AI capable of efficiently performing advanced tasks.

Core Applications of Phi-3-Vision-128K-Instruct

Phi-3-Vision-128K-Instruct’s versatility makes it worthwhile across a range of domains. Its key applications include:

1. Document Extraction and OCR

The model excels in transforming images of text, like scanned documents, into editable digital formats. Whether it’s a simple PDF or a complex layout with tables and charts, Phi-3-Vision-128K-Instruct can accurately extract the content, making it a valuable tool for digitizing and automating document workflows.

2. General Image Understanding

Beyond text, the model can parse visual content, recognize objects, interpret scenes, and extract useful information from images. This ability makes it suitable for a wide array of image-processing tasks.

3. Efficiency in Memory and Compute-Constrained Environments

Phi-3-Vision-128K-Instruct is designed to work efficiently in environments with limited computational resources, ensuring high performance without excessive demands on memory or processing power.

4. Real-Time Applications

The model can reduce latency, making it an excellent choice for real-time applications, such as live data feeds, chat-based assistants, and streaming content analysis.

Getting Started with Phi-3-Vision-128K-Instruct

To harness the power of this model, you’ll need to set up your development environment. Phi-3-Vision-128K-Instruct is integrated into the Hugging Face transformers library, version 4.40.2. Make sure your environment has the following packages installed:

# Required Packages

flash_attn==2.5.8

numpy==1.24.4

Pillow==10.3.0

Requests==2.31.0

torch==2.3.0

torchvision==0.18.0

transformers==4.40.2

To load the model, update your transformers library and install it directly from the source:

pip uninstall -y transformers && pip install git+https://github.com/huggingface/transformers

Once set up, you can begin using the model for AI-powered document extraction and text generation.

Example Code for Loading Phi-3-Vision-128K-Instruct

Here’s a basic example in Python for initializing and making predictions using Phi-3-Vision-128K-Instruct:

from PIL import Image

import requests

from transformers import AutoModelForCausalLM, AutoProcessor

class Phi3VisionModel:

def __init__(self, model_id=”microsoft/Phi-3-vision-128k-instruct”, device=”cuda”):

self.model_id = model_id

self.device = device

self.model = self.load_model()

self.processor = self.load_processor()

def load_model(self):

return AutoModelForCausalLM.from_pretrained(

self.model_id,

device_map=”auto”,

torch_dtype=”auto”,

trust_remote_code=True

).to(self.device)

def load_processor(self):

return AutoProcessor.from_pretrained(self.model_id, trust_remote_code=True)

def predict(self, image_url, prompt):

image = Image.open(requests.get(image_url, stream=True).raw)

prompt_template = f”<|user|>\n<|image_1|>\n{prompt}<|end|>\n<|assistant|>\n”

inputs = self.processor(prompt_template, [image], return_tensors=”pt”).to(self.device)

output_ids = self.model.generate(**inputs, max_new_tokens=500)

return self.processor.batch_decode(output_ids, skip_special_tokens=True)[0]

phi_model = Phi3VisionModel()

image_url = “https://example.com/sample_image.png”

prompt = “Extract the data in json format.”

response = phi_model.predict(image_url, prompt)

print(“Response:”, response)

Testing OCR Capabilities with Real-World Documents

We ran experiments with various types of scanned documents to test the model’s OCR capabilities. For example, we used a scanned Utopian passport and a Dutch passport, each with different levels of clarity and complexity.

Example 1: Utopian Passport

The model could extract detailed text from a high-quality image, including name, nationality, and passport number.

Output:

{

“Surname”: “ERIKSSON”,

“Given names”: “ANNA MARIA”,

“Passport Number”: “L898902C3”,

“Date of Birth”: “12 AUG 74”,

“Nationality”: “UTOPIAN”,

“Date of Issue”: “16 APR 07”,

“Date of Expiry”: “15 APR 12”

}

Example 2: Dutch Passport

The model handled this well-structured document effortlessly, extracting all the necessary details accurately.

The Architecture and Training Behind Phi-3-Vision-128K-Instruct

Phi-3-Vision-128K-Instruct stands out because it can process long-form content thanks to its extensive context window of 128,000 tokens. It combines a robust image encoder with a high-performing language model, enabling seamless visual and textual data integration.

The model was trained on a dataset that included both synthetic and real-world data, focusing on a wide range of tasks such as mathematical reasoning, common sense, and general knowledge. This versatility makes it ideal for a variety of real-world applications.

Performance Benchmarks

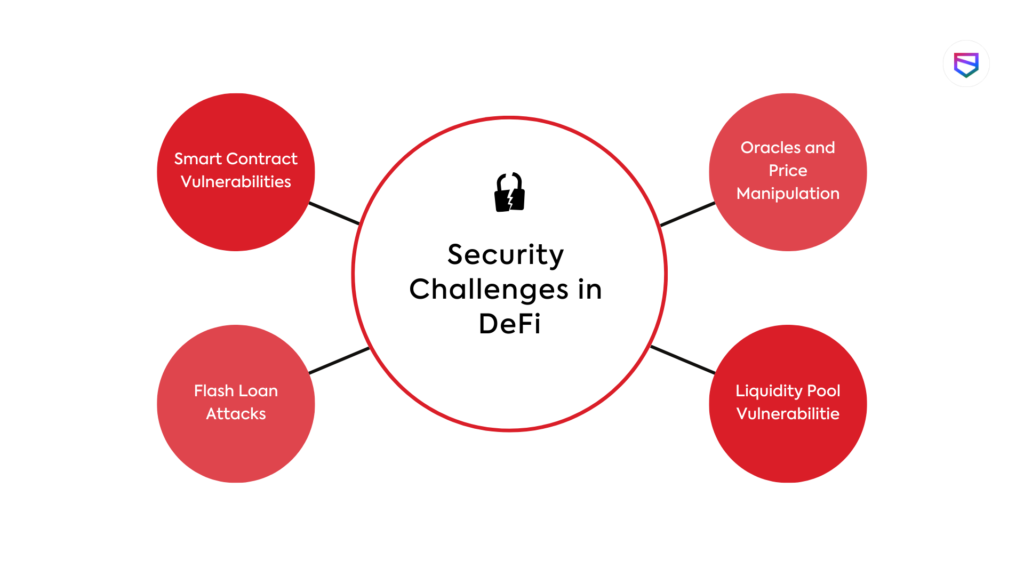

Phi-3-Vision-128K-Instruct has achieved impressive results on several benchmarks, particularly in multimodal tasks. Some of its highlights include:

The model scored 81.4% on the ChartQA benchmark and 76.7% on AI2D, making it one of the top performers in these categories.

Why AI-Powered OCR Matters for Businesses

AI-driven document extraction and OCR are transformative for businesses. By automating tasks such as PDF parsing, invoice processing, and data entry, businesses can streamline operations, save time, and reduce errors. Models like Phi-3-Vision-128K-Instruct are indispensable tools for digitizing physical records, automating workflows, and improving productivity.

Responsible AI and Safety Considerations

While Phi-3-Vision-128K-Instruct is a powerful tool, it is essential to be mindful of its limitations. The model may produce biased or inaccurate results, especially in sensitive areas such as healthcare or legal contexts. Developers should implement additional safety measures, like verification layers when using the model for high-stakes applications.

Future Directions: Fine-Tuning the Model

Phi-3-Vision-128K-Instruct supports fine-tuning, allowing developers to adapt the model for specific tasks, such as enhanced OCR or specialized document classification. The Phi-3 Cookbook provides fine-tuning recipes, making extending the model’s capabilities for particular use cases easy.

Conclusion

Phi-3-Vision-128K-Instruct represents the next leap forward in AI-powered document processing. With its sophisticated architecture and powerful OCR capabilities, it is poised to revolutionize the way we handle document extraction, image understanding, and multimodal data processing.

As AI advances, models like Phi-3-Vision-128K-Instruct are leading the charge in making document processing more efficient, accurate, and accessible. The future of AI-powered OCR and document extraction is bright, and this model is at the forefront of that transformation.

FAQs

1. What is the main advantage of Phi-3-Vision-128K-Instruct in OCR? Phi-3-Vision-128K-Instruct can process both text and images simultaneously, making it highly effective for complex document extraction tasks like OCR with tables and charts.

2. Can Phi-3-Vision-128K-Instruct handle real-time applications? Yes, it is optimized for low-latency tasks, making it suitable for real-time applications like live data feeds and chat assistants.

3. Is fine-tuning supported by Phi-3-Vision-128K-Instruct? Absolutely. The model supports fine-tuning, allowing it to be customized for specific tasks such as document classification or improved OCR accuracy.

4. How does the model perform with complex documents? The model has been tested on benchmarks like ChartQA and AI2D, where it demonstrated strong performance in understanding and extracting data from complex documents.

5. What are the responsible use considerations for this model? Developers should be aware of potential biases and limitations, particularly in high-risk applications such as healthcare or legal advice. Additional verification and filtering layers are recommended.