Blockchain Whitepaper – A Comprehensive Overview for Investors and Enthusiasts – Web3oclock

Table of Contents:

Importance of whitepapers in blockchain projects

Standard Whitepaper Components

How to read and analyze a whitepaper

Have you ever wondered how a blockchain project starts? Before a new blockchain takes off, one critical document lays the groundwork: the whitepaper. Imagine you’re about to invest in or use a new blockchain technology; you want to know if it’s reliable, right? That’s where the blockchain whitepaper comes in. It’s like a blueprint that helps us understand everything from how the technology works to what problems it solves. Without a whitepaper, making informed decisions would be a lot harder. So, why do we need a blockchain whitepaper? Let’s dive in and see how it clears up confusion and sets the stage for success.

A Blockchain Whitepaper is an authoritative document that explains the technical details, purpose, and structure of a blockchain project. It serves as a comprehensive guide for potential investors, developers, and users to understand how the project works and what problem it aims to solve. Blockchain Whitepapers often include information on the project’s architecture, consensus mechanism, tokenomics, and use cases.

Blockchain Whitepapers are typically published during the early stages of a project to attract investors, developers, and users by providing transparency and building trust in the project’s goals. They also help set the foundation for future development and community engagement.

Why do blockchain projects need whitepapers? Here’s why they are indispensable:

Importance of whitepapers in blockchain projects:

1. Clear Project Vision:

A whitepaper outlines the primary goals and objectives of a blockchain project, giving potential investors, developers, and users a clear understanding of what the project aims to achieve. It provides a framework for the project’s mission, use cases, and long-term impact.

2. Technical Blueprint:

It details the technical architecture, protocols, and underlying technology of the blockchain. This includes information on how the project will function, the consensus mechanism, the role of nodes, security features, and other technical specifications.

3. Problem-Solution Explanation:

Blockchain whitepapers usually begin by highlighting specific issues in the market or industry and explaining how the proposed blockchain project will solve these problems. This problem-solution structure helps in positioning the project as a needed solution and attracts support.

4. Transparency and Trust:

Transparency is a crucial aspect of blockchain, and whitepapers contribute to this by offering an open, detailed account of the project. Investors and stakeholders can evaluate the project’s credibility and legitimacy based on the information provided, building trust in its operations.

5. Investor Attraction:

A well-crafted Blockchain Whitepaper is often aimed at attracting investors by showcasing the potential return on investment (ROI), the tokenomics (economics of the blockchain’s native token), and the growth prospects. It helps investors understand how the project will generate value.

6. Guidance for Developers:

Blockchain Whitepapers serve as a guide for developers who want to contribute to the project. By providing technical details and explaining the codebase, consensus algorithms, and APIs, they make it easier for developers to join and build on the project.

7. Legal and Regulatory Clarity:

While not always emphasized, some blockchain whitepapers include a section on compliance with legal and regulatory frameworks. This gives stakeholders confidence that the project operates within the legal bounds of the jurisdictions in which it intends to operate.

8. Marketing Tool:

The whitepaper is also a strategic marketing document that helps spread awareness of the project. It is often used to explain the unique selling points (USPs) of the blockchain and differentiate it from competitors in the market.

9. Community Engagement:

Whitepapers can spark interest within the blockchain community and initiate discussions. Engaged communities are vital for the long-term success of blockchain projects, and a comprehensive whitepaper helps in building a strong, dedicated user base.

10. Roadmap and Development Timeline:

A blockchain whitepaper often includes a detailed roadmap, highlighting the milestones for the project. This gives stakeholders a sense of the timeline for the development of various phases, such as token distribution, platform launches, and feature rollouts.

Most Blockchain Whitepapers follow a similar structure, designed to explain everything from the project’s vision to the technical workings. Let’s break down the standard components of a blockchain whitepaper.

The Components of Blockchain Whitepaper:

1. Introduction:

The introduction sets the stage by explaining the problem the project is solving. It highlights the main goals of the project and why it’s relevant in today’s world.

2. Market Overview:

This section gives an overview of the current market or industry. It may discuss challenges in the existing system and why a blockchain-based approach can provide a better solution. It helps readers understand the project’s potential.

3. Problem Statement:

Here, the whitepaper dives deeper into the specific issues the project aims to address. This could be anything from a lack of transparency in an industry to slow transaction speeds or security issues.

4. Solution:

This is the heart of the Blockchain Whitepaper, where the project introduces its solution—often in the form of a new blockchain protocol or platform. It explains how the technology will work and how it will solve the problems mentioned earlier.

5. Technology and Architecture:

For more tech-savvy readers, this section breaks down the blockchain’s technical aspects. It might include details about consensus mechanisms (how nodes agree on the state of the ledger), smart contracts, cryptography, and network architecture.

6. Tokenomics (Token Economy):

Many blockchain projects have their own token, and this section explains how that token will be distributed and used. It talks about the total supply, how tokens will be allocated, and their role in the ecosystem.

7. Roadmap:

A roadmap lays out the project’s development timeline. It shows what’s been achieved so far and the plans for future updates or milestones. This helps investors and users see where the project is headed.

8. Team:

The Blockchain Whitepaper usually includes a section about the project’s team members. It highlights their experience and expertise, building trust that they have the skills to make the project successful.

9. Legal and Regulatory Information:

This section might outline the project’s legal structure and how it complies with applicable laws and regulations, especially if it deals with cryptocurrency. It reassures readers that the team is aware of the legal implications.

10. Conclusion:

Finally, the conclusion wraps up the document by summarizing the project’s vision and inviting readers to support the project, either through investing, using the platform, or becoming a part of the community.

Reading a blockchain whitepaper can seem overwhelming, but it doesn’t have to be. Here are five easy steps to help you understand and analyze any whitepaper effectively:

How to Read and Analyze a Blockchain Whitepaper: 5 Simple Steps

1. Start with the Problem and Solution:

Begin by looking at the problem the project is trying to solve. Does the Blockchain Whitepaper clearly explain a real-world issue? Then, move on to the solution. Make sure the project’s approach is practical and innovative. If the problem seems vague or the solution unclear, that could be a red flag.

2. Understand the Technology:

You don’t need to be a tech expert, but try to get a general idea of how the technology works. Look for sections that explain the blockchain’s structure, consensus mechanism (like Proof of Work or Proof of Stake), and any unique features. If the explanation is needlessly complicated or lacks detail, it might be worth questioning the project’s feasibility.

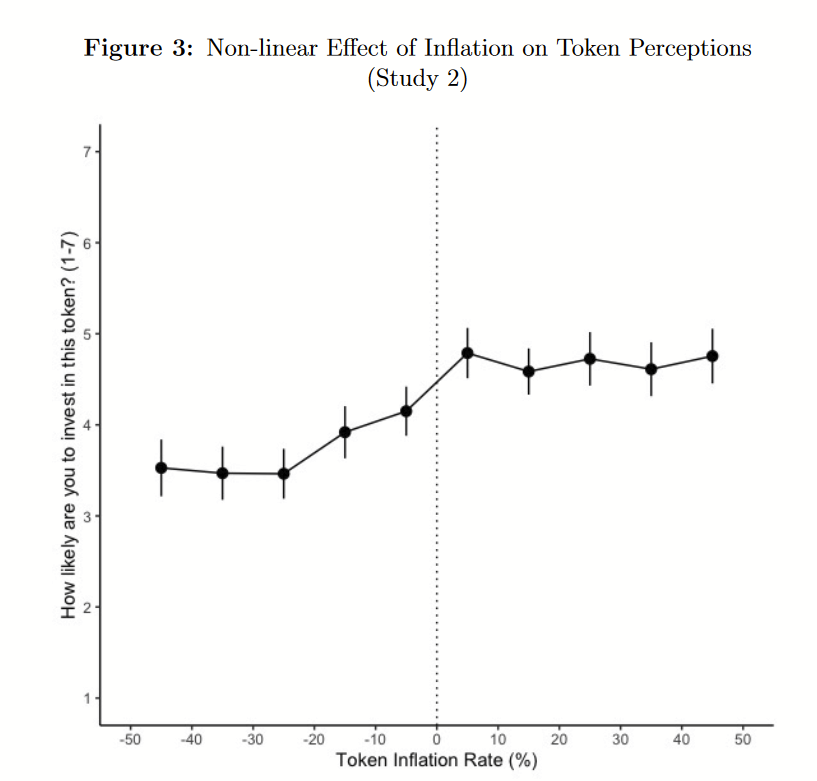

3. Check the Tokenomics:

If the project involves a cryptocurrency, pay attention to the tokenomics. This includes how tokens will be distributed, their role in the project’s ecosystem, and whether they have real utility. A well-designed token economy is crucial for long-term success, so make sure it’s fair and sustainable.

4. Evaluate the Team and Roadmap:

A strong team with relevant experience is essential. Review the team’s background and check if they have the expertise needed to make the project a reality. Also, look at the roadmap: does it have realistic milestones? An overly ambitious or vague timeline might suggest the project is more hype than substance.

5. Look for Legal and Regulatory Compliance:

Blockchain projects often operate in a gray area when it comes to regulations. Make sure the Blockchain Whitepaper addresses any legal aspects, such as how the project will comply with local laws. Projects that ignore regulatory concerns may run into trouble later, putting investors at risk.
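
To make step 3 (Check the Tokenomics) more concrete, here is a minimal Python sketch of the kind of sanity check a reader might run on a whitepaper’s allocation table. Everything in it is an illustrative assumption: the `Allocation` fields, the example figures, and especially the 40% insider threshold, which is not an accepted standard and is not taken from any real project.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Allocation:
    """One row of a hypothetical token allocation table."""
    holder: str      # illustrative label, e.g. "team" or "public sale"
    percent: float   # share of the total supply, in percent
    insider: bool    # True for team, advisors, and private investors


def tokens_for(total_supply: int, allocation: Allocation) -> int:
    """Convert a percentage share into a token count."""
    return round(total_supply * allocation.percent / 100)


def check_tokenomics(allocations: List[Allocation],
                     max_insider_percent: float = 40.0) -> List[str]:
    """Return warnings; an empty list means no basic issue was found.

    The 40% default is an arbitrary assumption for illustration only.
    """
    warnings = []
    total_percent = sum(a.percent for a in allocations)
    if abs(total_percent - 100.0) > 1e-6:
        warnings.append(f"allocations sum to {total_percent:.1f}%, not 100%")
    insider_percent = sum(a.percent for a in allocations if a.insider)
    if insider_percent > max_insider_percent:
        warnings.append(f"insiders hold {insider_percent:.1f}% of supply")
    return warnings


# Made-up example figures, not taken from any real project.
example = [
    Allocation("public sale", 40.0, insider=False),
    Allocation("ecosystem fund", 25.0, insider=False),
    Allocation("team", 20.0, insider=True),
    Allocation("private investors", 15.0, insider=True),
]
print(check_tokenomics(example))
print(tokens_for(1_000_000_000, example[2]))
```

A check like this only catches arithmetic slips and obvious concentration. Vesting schedules, lock-ups, and real token utility still have to be judged by reading the whitepaper itself.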

Here are some of the most well-known and influential Blockchain Whitepapers that have set a standard in the industry.

Top Blockchain Whitepapers:

1. Bitcoin Whitepaper (2008):

Title: Bitcoin: A Peer-to-Peer Electronic Cash System.

Author: Satoshi Nakamoto

Why It’s Important: This is the whitepaper that started it all. Satoshi Nakamoto’s 9-page document laid the foundation for decentralized digital currency and blockchain technology. It clearly explains the concept of a peer-to-peer electronic payment system without relying on a third party like a bank.

Key Focus: Solves the double-spending problem and introduces blockchain and proof of work (PoW) for security and consensus.
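
The proof-of-work idea can be illustrated with a toy example. The sketch below is a simplification, not Bitcoin’s actual algorithm: Bitcoin hashes a binary block header twice with SHA-256 and compares the result against a numeric target, whereas this version hashes a string once and counts leading zero hex digits. The function names `mine` and `verify` and the sample data are hypothetical.

```python
import hashlib
from typing import Tuple


def _digest(block_data: str, nonce: int) -> str:
    """SHA-256 of the block data followed by the nonce, as a hex string."""
    return hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int) -> Tuple[int, str]:
    """Try nonces 0, 1, 2, ... until the hash starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = _digest(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution takes a single hash."""
    return _digest(block_data, nonce).startswith("0" * difficulty)


# Toy data; each extra zero digit multiplies the expected work by 16.
nonce, digest = mine("alice pays bob 1 coin", difficulty=3)
print(nonce, digest)
print(verify("alice pays bob 1 coin", nonce, difficulty=3))
```

The asymmetry is the point: finding a valid nonce takes many attempts, while verifying one is cheap, which is what makes rewriting history expensive for an attacker.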

2. Ethereum Whitepaper (2013):

Title: A Next-Generation Smart Contract and Decentralized Application Platform.

Author: Vitalik Buterin

Why It’s Important: Ethereum’s whitepaper proposed a blockchain with built-in support for general-purpose smart contracts and decentralized applications (dApps), expanding the blockchain’s potential far beyond digital currency. It laid the groundwork for creating a programmable blockchain that could run various decentralized applications.

Key Focus: Introduces smart contracts, decentralized applications (dApps), and Ethereum’s blockchain.

3. Polkadot Whitepaper (2016):

Title: Polkadot: Vision for a Heterogeneous Multi-Chain Framework.

Author: Gavin Wood

Why It’s Important: Polkadot’s whitepaper envisions an interconnected web of blockchains, allowing different blockchains to communicate with each other. It introduces a concept known as “parachains” and a unique consensus model.

Key Focus: Cross-chain interoperability, governance, scalability, and security.

4. Cardano Whitepapers (2017):

Title: Ouroboros: A Provably Secure Proof-of-Stake Blockchain Protocol, and Why We Are Building Cardano

Author: IOHK (Input Output Hong Kong)

Why It’s Important: Cardano’s whitepapers focus on the scientific approach behind their blockchain, introducing a proof-of-stake (PoS) consensus mechanism. The project is known for being research-driven and peer-reviewed.

Key Focus: Secure proof-of-stake consensus, scalability, sustainability, and governance.
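
As a rough illustration of the proof-of-stake idea, the sketch below picks a block producer with probability proportional to stake. This is not the Ouroboros protocol: real systems derive their randomness from verifiable on-chain sources rather than a local random number generator, and the validator names and stake amounts here are made up.

```python
import random
from typing import Dict


def pick_validator(stakes: Dict[str, int], rng: random.Random) -> str:
    """Pick one validator with probability proportional to its stake."""
    names = list(stakes)
    weights = [stakes[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Hypothetical stake distribution; a seeded generator keeps runs repeatable.
stakes = {"alice": 70, "bob": 20, "carol": 10}
rng = random.Random(0)
counts = {name: 0 for name in stakes}
for _ in range(10_000):
    counts[pick_validator(stakes, rng)] += 1
print(counts)
```

Over many rounds, each validator’s share of selected blocks approaches its share of the total stake, which is the basic fairness property a proof-of-stake whitepaper needs to argue for.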

5. Libra (Diem) Whitepaper (2019):

Title: An Introduction to Libra.

Author: Facebook (Now Meta) and the Libra Association

Why It’s Important: Though it faced regulatory challenges and rebranded to Diem, the Libra whitepaper proposed a global digital currency backed by a reserve of assets. It aimed to create a financial system that could be used globally, especially for those without access to banking services.

Key Focus: Global financial inclusion, stablecoin backed by a reserve, secure and scalable blockchain.

6. Filecoin Whitepaper (2017):

Title: Filecoin: A Decentralized Storage Network

Author: Protocol Labs

Why It’s Important: Filecoin’s whitepaper proposes a decentralized storage network where users can rent out their unused storage space. It’s a groundbreaking example of how blockchain can be applied outside finance.

Key Focus: Decentralized data storage, incentives for file storage and retrieval, Proof-of-Replication, and Proof-of-Spacetime.

7. Solana Whitepaper (2017):

Title: Solana: A New Architecture for a High-Performance Blockchain

Author: Anatoly Yakovenko

Why It’s Important: Solana’s whitepaper focuses on solving the scalability problem faced by most blockchains. It introduces Proof of History (PoH), a cryptographic clock that orders transactions before the network reaches consensus on them, which significantly speeds up transaction processing.

Key Focus: High throughput, low latency, and scalable blockchain solutions.
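
The core idea behind Proof of History, a hash chain where each output feeds the next input, can be sketched in a few lines. This is a toy model under stated assumptions, not Solana’s implementation: the function names, the `(index, hash, event)` log layout, and the way events are appended to the running state are all chosen for illustration.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

# (tick index, hash after this tick, event mixed in at this tick or None)
Entry = Tuple[int, bytes, Optional[bytes]]


def _next_state(state: bytes, event: Optional[bytes]) -> bytes:
    """One tick: hash the previous state, plus the event if there is one."""
    payload = state if event is None else state + event
    return hashlib.sha256(payload).digest()


def run_sequence(seed: bytes, ticks: int, events: Dict[int, bytes]) -> List[Entry]:
    """Produce a sequential hash chain, mixing in events at given ticks."""
    state = hashlib.sha256(seed).digest()
    log: List[Entry] = []
    for i in range(ticks):
        event = events.get(i)
        state = _next_state(state, event)
        log.append((i, state, event))
    return log


def verify_sequence(seed: bytes, log: List[Entry]) -> bool:
    """Replay the chain and confirm every recorded hash matches."""
    state = hashlib.sha256(seed).digest()
    for _, recorded, event in log:
        state = _next_state(state, event)
        if state != recorded:
            return False
    return True


log = run_sequence(b"genesis", ticks=5, events={2: b"alice pays bob"})
print(verify_sequence(b"genesis", log))
```

Because each hash depends on the one before it, the chain cannot be produced out of order, so an event mixed in at tick 2 is provably placed after tick 1 and before tick 3. Changing or moving the event breaks every later hash.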

Blockchain Whitepapers are crucial for understanding the essence and potential of a blockchain project. They provide clarity on the problem being solved, the technology behind it, and the team driving the vision. By carefully reading through the problem statement, technology details, tokenomics, team credentials, and legal considerations, you can gauge the project’s legitimacy and prospects.
